VR is a weird place. When we close our eyes and imagine being somewhere else, we mostly think of it as our surroundings being different. Putting on a headset allows us to change our surroundings to scores of possibilities. It’s a lot like the holodeck in Star Trek. It doesn’t seem real though. There are several reasons why virtual reality, even at its best, is such a poor substitute for actual reality. How close are we to bridging these gaps? Read on for more thoughts.


Graphics and Sound

Even in the highest quality experiences, I’ve never been able to suspend disbelief. There are so many tiny things that make reality so… real. It’s the fact that there’s clutter everywhere, like loose leaves and litter outside, and papers and random belongings inside. Surfaces have fine texture details, discolorations, reflections, shadows, scratches and dents. If something falls, it makes specific noises, it lands as your brain expects it to, and cracks or breaks as appropriate.

Computer graphics have come so far over the past few decades. I’ve seen computer graphics demos that truly are indistinguishable from reality. There are two important caveats though. First, demos are usually enjoyed by watching a YouTube video. If you try to run them on your own machine, you will likely need to dial down many of the best details in order to display anything. Second, these realistic demos are incredibly carefully tailored for specific environments. All of the imperfections in the scene that help convince our brain need to be explicitly added or captured photogrammetrically.

I would argue that it might be possible to overcome many of these challenges for a world explicitly eschewing them, such as an animated or other-worldly setting. If your chosen reality is consistent wherever you look, it goes a long way towards convincing you. If you can get close to things and see minor details, or open and close things as you expect, it starts to feel more real.

It’s way too common to have frames stuttering or freezing in complex scenes. That’s pretty unforgivable from a perception point of view. It will be some time until we have computer graphics in VR as sharp and smooth as real life, but there is definitely progress being made in that realm. Unfortunately, many of these advancements are right at the edge of current technology and require still more work to make it to the stereoscopic immersive views of virtual reality. Headsets like the Quest 2, which perform all of the computation themselves (as opposed to linking to a computer to render the imagery), are unlikely to ever achieve such realism, at least with the chips currently in use.

Movement

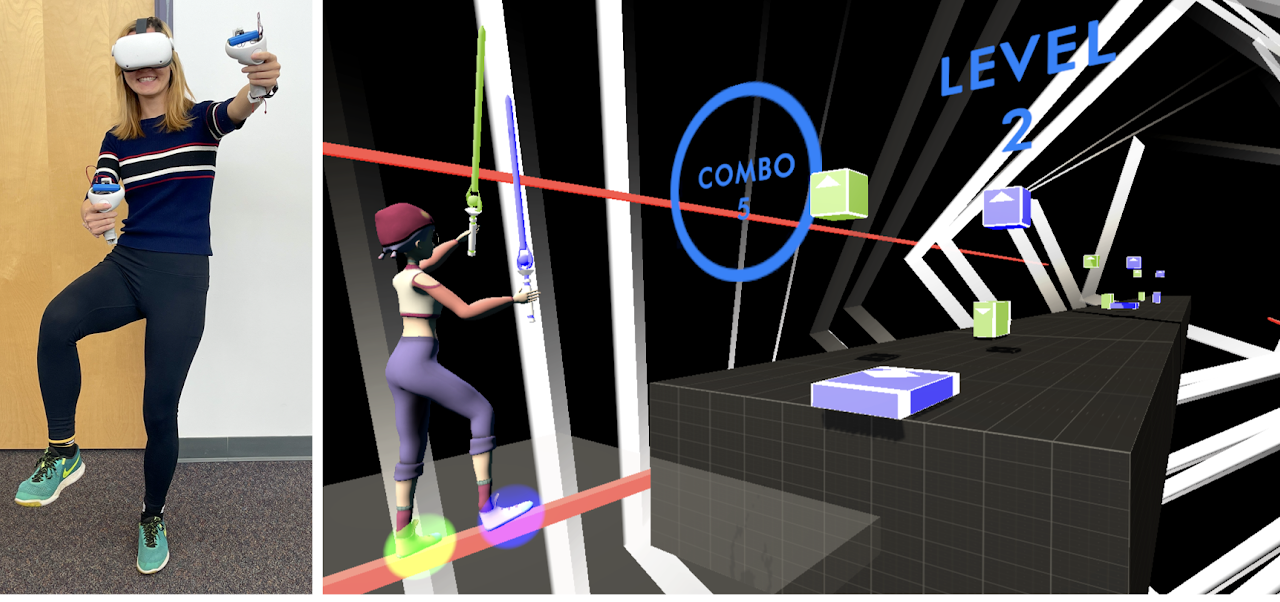

Even the best environment is always compromised by the fact that I can’t really move in it. My play space is 2×3m or so, which allows me to take a few steps in any direction before hitting the “guardian” border. It’s definitely better than needing to stand in place, but not by much. There are three methods of movement in VR, each with its own pros and cons.

Thumbstick-based movement

This method feels like playing a game. Every game system controller has some form of thumbstick and/or thumbpad to move around. This never bothered me in two-dimensional worlds, but in the world of VR it makes me sick to my stomach. It’s a smooth, quick, continuous gliding movement without the accompanying inner ear sensations. Even in real life, the smooth powerful acceleration in an electric car can induce a similar feeling. I’ve played a few games that mitigate the feeling with slower movement and a small amount of “bounce” to feel more like steps. There are also some games that let you swing your arms in place to trigger movement. Finally, restricting the view a bit while moving (like a heavy vignette) is also helpful. You can squint your eyes while gliding to get a similar benefit. None of these tricks fix it for me, but they can help.

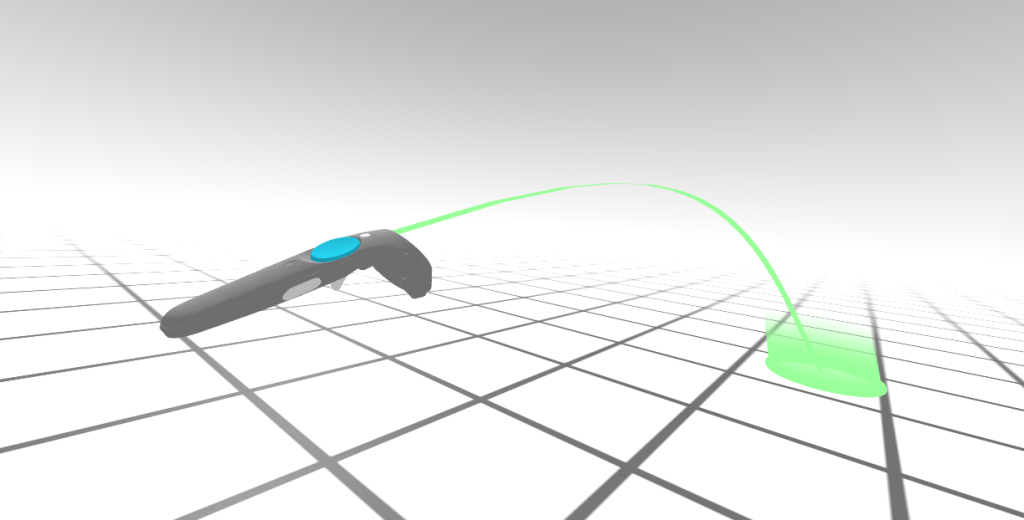

Teleportation-based movement

Since continuous movement can cause discomfort, thankfully most experiences support jumping to spots via teleportation. While this solves the issue of brain/body disconnect, it completely breaks the immersion. If it wasn’t for being able to teleport, I’d probably have given up on VR some time ago. Realistic or not, it works well. I would imagine that even people who tend to use the continuous movement still teleport at times as well.

Feet-based movement

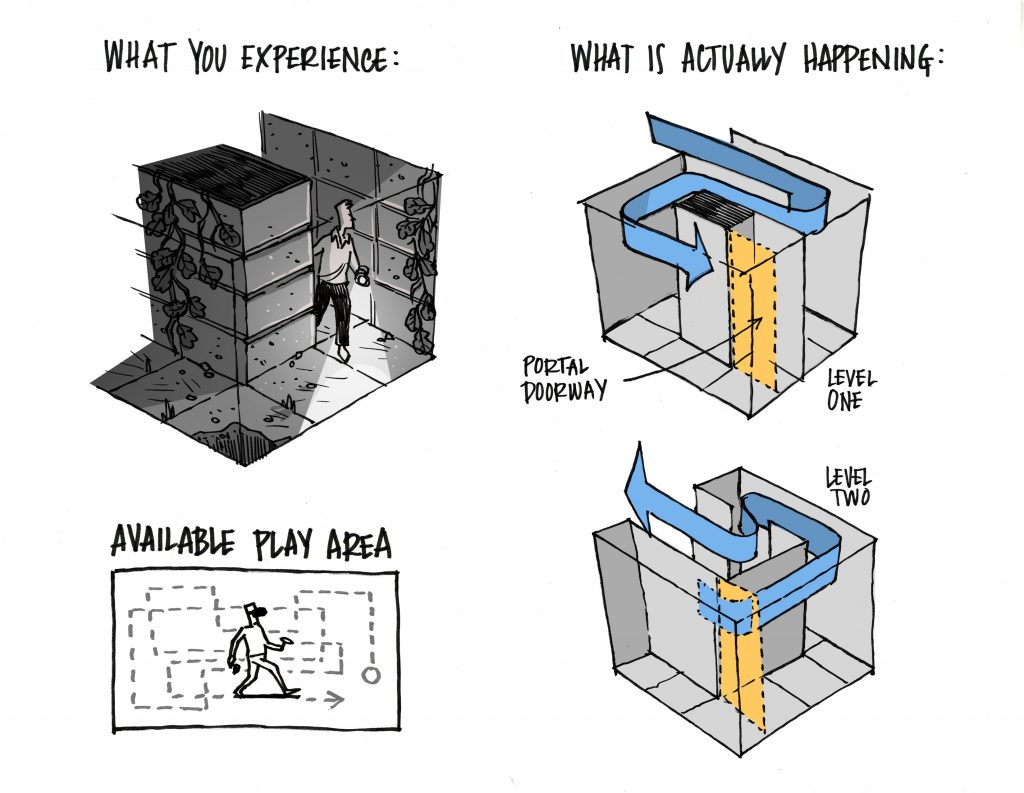

Assuming you are able, the best way to move in virtual reality is by using your feet. You can walk around and really feel like you’re exploring the space. Of course, it doesn’t work so well with uneven terrain since your floor is likely pretty flat. The main issue with physically walking is your limited space. Once you hit the border (and trigger the guardian borders to appear), all you can do is change direction. If the experience lets you “quick turn” to pivot 90°, you can both virtually and physically turn around, then keep walking to approximate infinite distance. Of course, walking back and forth in your space is a great way to cause problems with your headset cable if you have one!

There have been experiences that have tried to work around this by basing the environment on your real-world space. This requires an environment that can be reconfigured, since the virtual walls are generated based on your boundaries — not a good choice for recreations of real places. In these specially generated scenes, everything is arranged to keep you within the space in a more natural way. I’ve wondered if there’s some way to trick the brain into basically walking in circles based on a skewed perspective in your headset, but since I haven’t seen it done yet, I’m assuming it wouldn’t work.

Hardware

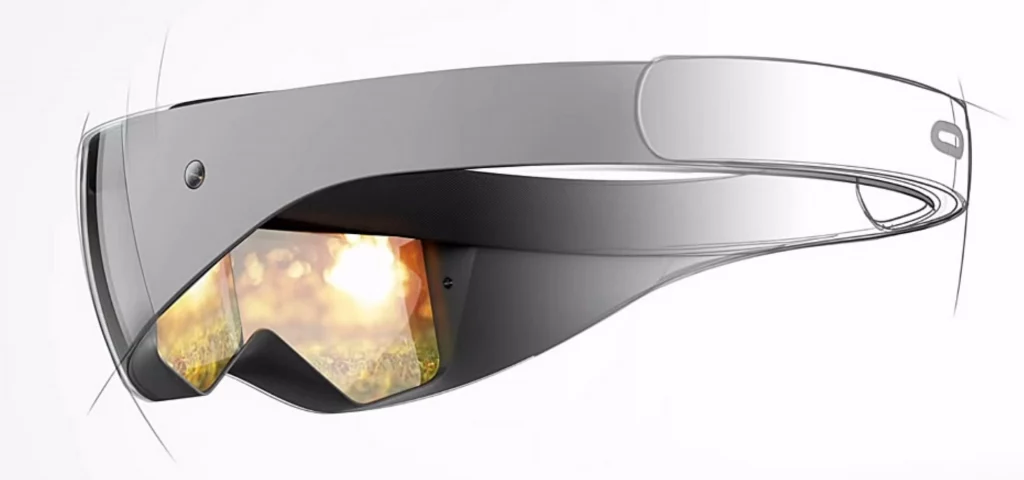

VR hardware is always going to be a limitation to work around. At the bare minimum, you need to wear a chunky headset. This adds weight to your neck, often slides around requiring readjustment for fit or focus, can cause sweat on your face around the lenses, and despite all the tweaking, still ends up feeling like you’re looking through a pair of binoculars due to the limited field of view.

The ideal would be very lightweight headsets more like ski goggles or even a regular pair of glasses. Because of the requisite electronics, I think we’ll reach the goggles stage before standard glasses, but either option would be so much better. The dream headset would be light and would combine augmented reality (overlaying virtual objects in your real vision) and full virtual reality (completely replacing the real world with a virtual scene). You could leave the glasses on even when not in VR, they could show notifications or contextual information when needed, and switching to VR mode would happen for apps that needed it. You could watch Netflix projected onto the side of your house, then completely replace your environment with a trip to Pompeii.

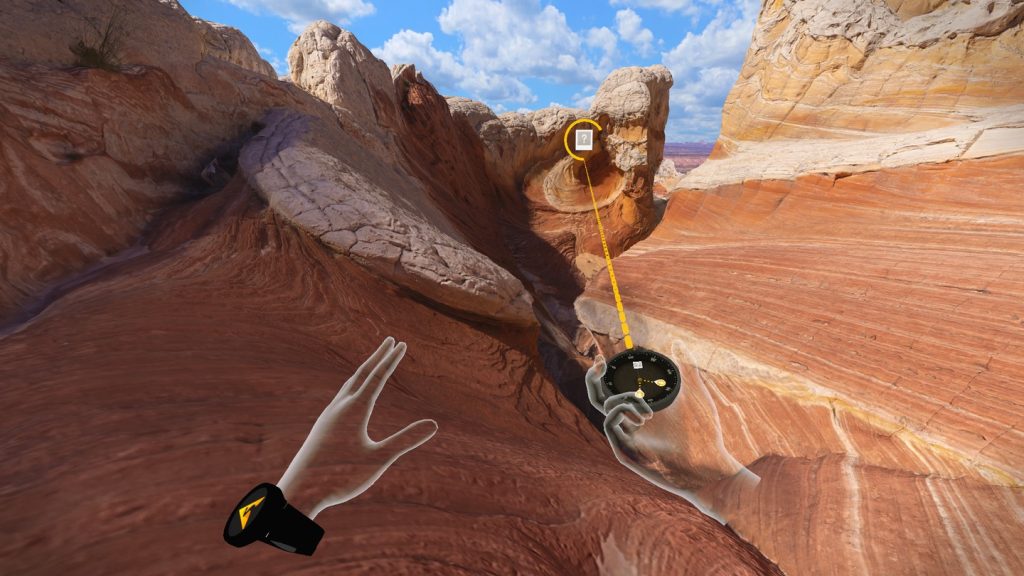

For now, not only do we have clunky headsets, we also have controllers to break reality. You need to remember which controller has A and B vs. X and Y, use the thumbstick to move, squeeze the trigger button to pick something up, and activate the menu with another button. Better experiences let you interact using in-app natural controls such as a virtual wristwatch. I love just turning my wrist and seeing indicators and buttons that integrate with the experience. It just feels so much more natural. Unfortunately, in order to do this, we still need to hold controllers since they are what enable the headset to track where our hands are.

Determining controller position with some systems (HTC Vive, Valve Index) requires you to set up tracking stations around the room. This yields high-quality results but is more expensive and complicated. Thankfully, Facebook/Meta has been doing research on direct hand tracking. This uses the cameras in the headset to identify where your hands are, including positions of your fingers. It’s considered an experimental feature at this point, but already shows great promise. It likely won’t be good enough for games, which often require a large set of controls, but for non-gaming applications like we cover on VR Voyaging, this could be more than adequate.

Avatars and Bodies

I feel like everything so far has made a good case for why virtual reality still isn’t reality, but I also feel like all of them are only second-place to the disembodied feeling we get. For many experiences, you are a floating pair of eyes with floating hands in front of you. I’m not sure about you, but this is very different from how I usually see myself.

When I’m transported to a mountain top, I look in every direction. Foolishly, I expect there to be a body attached to me. If I look down, I should see my shoulders, arms, chest, legs, etc. Without those, I’m floating in a dream. I’m not anchored to the ground. Why must it be this way?

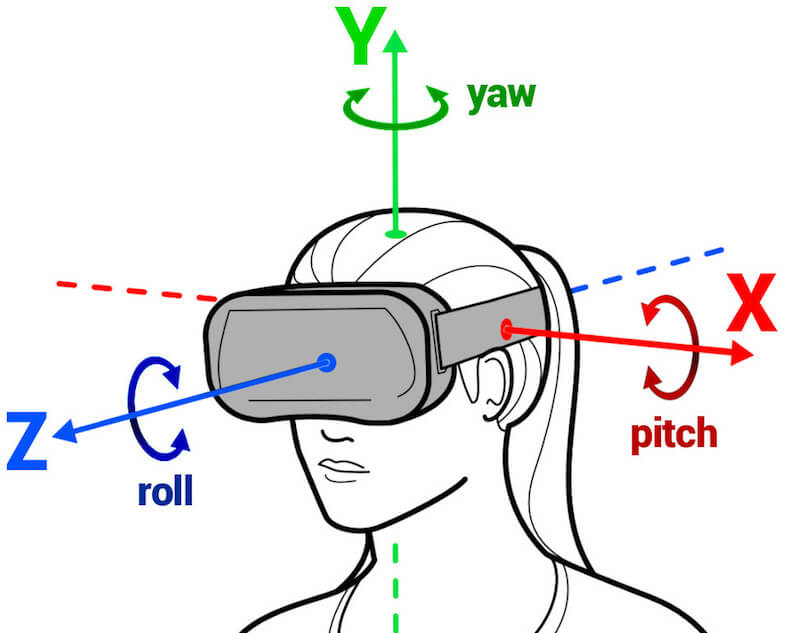

To understand why, you need to understand how tracking works to begin with. First, there is no body tracking. There is only headset and controller tracking. The headset has built-in sensors to detect orientation as pitch (x-axis), yaw (y-axis), and roll (z-axis). Tracking only orientation is also called three degrees of freedom, or “3DOF.” The very first headsets were only able to do 3DOF, and this is how simple smartphone VR setups like Google Cardboard work as well. This is ok for turning your head to look around a 360° video, but if you take a step in any direction, the scene doesn’t reflect that movement, so it starts to feel disorienting.
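To make 3DOF a bit more concrete, here is a minimal Python sketch of what an orientation-only headset knows. The function name `forward_vector` and the axis convention (+y is up, you look down -z when everything is zero) are my own assumptions for illustration, not anything a particular headset exposes.

```python
import math

def forward_vector(pitch_deg, yaw_deg):
    """Unit vector the headset is looking along.

    Assumed convention (hypothetical, for illustration only):
    +y is up, the viewer looks down -z at pitch = yaw = 0,
    positive pitch looks up, positive yaw turns to the left.
    Roll spins the view around this vector, so it does not change it.
    """
    pitch = math.radians(pitch_deg)
    yaw = math.radians(yaw_deg)
    x = -math.sin(yaw) * math.cos(pitch)
    y = math.sin(pitch)
    z = -math.cos(yaw) * math.cos(pitch)
    return (x, y, z)

# Looking straight ahead, then turning the head a quarter turn to the left.
ahead = forward_vector(0, 0)
left = forward_vector(0, 90)
```

Notice that nothing in the inputs describes where your head is. Take a step sideways and the pitch, yaw, and roll are unchanged, so a 3DOF system has nothing new to render.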

Inside-Out Tracking

In order to figure out when you move your body, there are two methods of tracking. Inside-out uses cameras in the headset itself. The Meta Quest 1 and 2 and Windows Mixed Reality headsets use this method. It simply compares the image from the camera from one frame to the next to see if it appears to be moving up and down, left and right, or forward and backward. It’s very clever computation that would have been unthinkable to do in real-time (as you move) not too many years ago. Adding these three dimensions of movement to tracking is called six degrees of freedom, or “6DOF.”
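Here is a toy Python sketch of the frame-to-frame idea. It assumes we already have the same handful of feature points matched between two camera frames (the hard part, which I’m skipping), and the function name `apparent_motion` is made up for this example. Real headsets do far more than this, so treat it as an illustration of the principle only.

```python
import math

def apparent_motion(prev_points, curr_points):
    """Compare matched 2D feature points from two camera frames.

    Returns (dx, dy, scale):
      dx, dy - how far the points drifted in the image on average
      scale  - how much the points spread out (>1) or pulled together (<1)
    """
    n = len(prev_points)
    if n < 2 or n != len(curr_points):
        raise ValueError("need at least two matched points per frame")

    # Centroid of the points in each frame.
    pcx = sum(x for x, _ in prev_points) / n
    pcy = sum(y for _, y in prev_points) / n
    ccx = sum(x for x, _ in curr_points) / n
    ccy = sum(y for _, y in curr_points) / n

    # Average distance of the points from their centroid.
    prev_spread = sum(math.hypot(x - pcx, y - pcy) for x, y in prev_points) / n
    curr_spread = sum(math.hypot(x - ccx, y - ccy) for x, y in curr_points) / n
    if prev_spread == 0:
        raise ValueError("points in the previous frame must not all coincide")

    return (ccx - pcx, ccy - pcy, curr_spread / prev_spread)
```

If the points drift left in the image, the camera probably moved right; if they drift down, it probably moved up. If they spread apart, the camera probably moved forward. In practice a head turn also shifts the image, which is one reason the orientation sensors are still needed alongside the cameras.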

The second part of inside-out tracking relates to the controllers. Just like with the headset, there are sensors to detect tilt and rotation embedded inside. This would enable the system to detect how you are holding the controller, but not where. One solution to figure out the where could be to embed a camera inside the controllers just like with the headset. These would look out and see how the visuals move around from frame to frame. This isn’t currently done by any commercial system, but there is research that looks promising. This also has the enormous benefit of being able to perform full-body tracking — really the holy grail of immersion.

The current low-cost solution for tracking controllers is to have the headset do the work (Oculus/Meta Quest, Windows Mixed Reality). Controllers with rings on them typically emit a special pattern of infrared light. The headset uses the visibility of these rings to determine where in its field of vision they are. You might think the controller tilt and rotation sensors would be unnecessary, but they serve two purposes. First, they allow additional data points for better accuracy. Second, they help to augment tracking when your hands are out of sight of the headset cameras, such as when you turn your head far to the side or reach behind yourself.
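The second purpose can be sketched in a few lines of Python. This is only a guess at the general shape of the logic: `estimate_position` is a hypothetical function, and I’m assuming the controller’s motion sensors give us a usable velocity. Actual systems use much more sophisticated filtering.

```python
def estimate_position(camera_fix, last_position, velocity, dt):
    """Best guess of where a controller is right now.

    camera_fix    - (x, y, z) from the headset cameras, or None if the
                    controller is out of their sight
    last_position - last known (x, y, z), in meters
    velocity      - (vx, vy, vz) in m/s, assumed to come from the
                    controller's own motion sensors
    dt            - seconds since last_position was recorded
    """
    if camera_fix is not None:
        # The cameras can see the light pattern: trust them.
        return camera_fix
    # Out of view: coast along the last known motion.
    return tuple(p + v * dt for p, v in zip(last_position, velocity))
```

Coasting like this drifts quickly, which matches my experience: reach behind your back for more than a moment and the virtual hand starts to wander.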

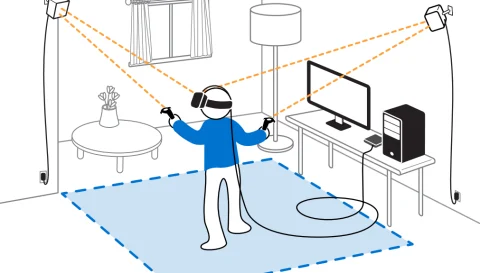

Inside-Out Tracking with Reference Points

Another method of inside-out tracking relies on a set of laser emitters (called base stations) around your play space. This is used with the HTC Vive, Valve Index, and several others. Instead of using cameras to analyze every frame, the headset and controllers contain sensors that look for laser beams sweeping side-to-side and top-to-bottom. With several base stations mounted on walls or poles doing this, location can be determined based on the exact moments that the sensors detect the laser pulses. Since the process requires line of sight with the stations, there must be considerations for occlusion, when the pulses are blocked by the position of your arms or the rest of your body. This necessitates at least two tracking stations to reduce misses, and realistically three or even four are needed for larger areas. (edit: thanks to Rogue Transfer in the comments below for some corrections)
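The timing trick is easier to see with numbers. In this Python sketch I’m assuming the laser sweeps at a steady, known rate and that each sweep starts at a known reference moment; the 60 rotations per second default and the name `sweep_angle_deg` are assumptions for illustration, not specifications of any particular base station.

```python
def sweep_angle_deg(t_sync, t_hit, rotation_hz=60.0):
    """Angle of a sensor as seen from a base station, in degrees.

    t_sync      - time (seconds) the sweep started, assumed known
    t_hit       - time (seconds) the sensor detected the laser
    rotation_hz - assumed sweep rate, in full rotations per second
    """
    if t_hit < t_sync:
        raise ValueError("the laser cannot arrive before the sweep starts")
    # The later the beam arrives, the further around the sweep the sensor sits.
    return ((t_hit - t_sync) * rotation_hz * 360.0) % 360.0

# A hit 1/240 of a second into a 60 Hz sweep is a quarter of the way around.
angle = sweep_angle_deg(0.0, 1 / 240)
```

One sweep gives a side-to-side angle and the other gives a top-to-bottom angle, which together point along a line from the base station to the sensor. Combine several sensors and stations and you can work out a position.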

Outside-in Tracking

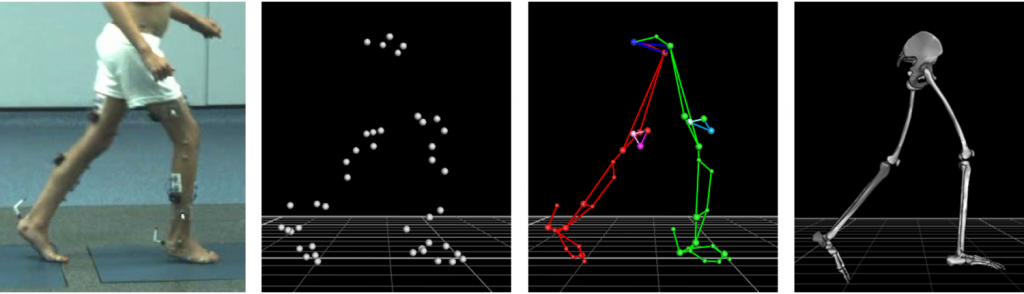

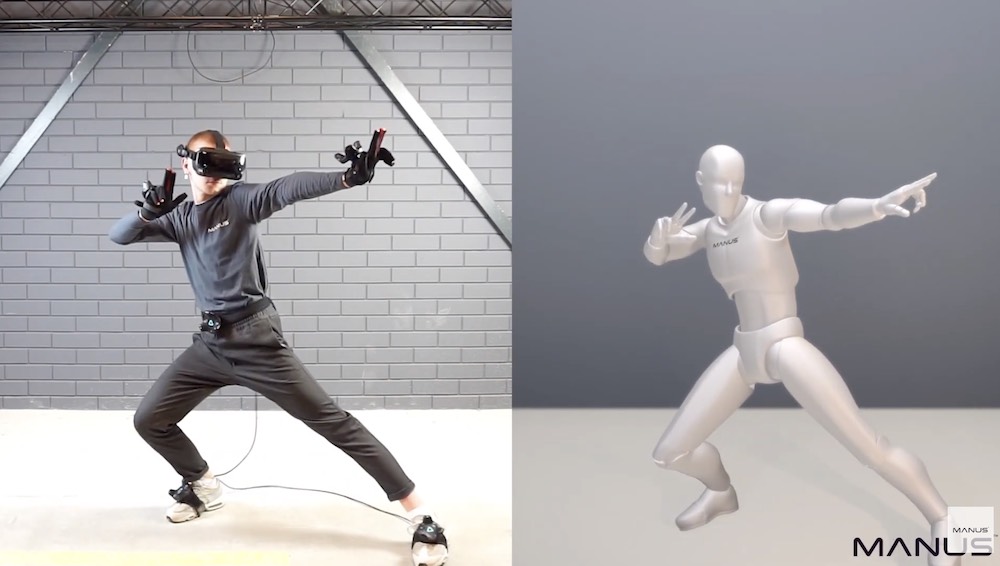

The final type of tracking in use (albeit not very common in the home) is using a camera or similar device outside of your play space to track where you are and/or how your body is posed. You’ve almost certainly seen motion-capture for movies and television. This uses white balls or other markers affixed to the body. A camera records the person moving, then software uses the positions of the markers to calculate the pose.

You can achieve a similar effect using a depth camera to calculate body shape and pose. This is how the Xbox Kinect worked. These are actually pretty good and would be a good choice for apps that don’t require a high level of precision. They don’t work very well if you have an arm behind your back, but if it’s not a fast-paced game it’s not really a problem.

Using markers on different parts of your body lets you build up a skeleton rather than just the three points of the headset and two controllers. Markers on your elbows, knees, waist, and feet provide a pretty detailed view, but add quickly to the cost and complexity of your setup since you either need the outside-in camera or you need fancy trackers or sensors. For balls/markers a pretty standard camera will suffice. For laser-pulse trackers ($100 each), you need the base stations ($150 each). Active sensing trackers are completely self-contained but aren’t very precise. They have their own orientation sensors and make some assumptions about where they are based on the physics of the human body (if the foot is here, then the leg can’t be there). They all have the advantage of bringing your body into VR.
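The “if the foot is here, then the leg can’t be there” idea can be shown with one very simple rule: a bone doesn’t change length. The Python sketch below is my own illustration of that kind of constraint, with a made-up function name and example measurements; I don’t know what rules any specific tracker actually applies.

```python
import math

def constrain_to_bone(parent, estimate, bone_length):
    """Pull a noisy joint estimate back to a plausible spot.

    parent      - (x, y, z) of the joint the bone hangs from (e.g. the hip)
    estimate    - noisy (x, y, z) guess for the next joint (e.g. the knee)
    bone_length - fixed length of the bone between them, in meters

    Keeps the direction of the estimate but forces its distance from
    the parent to equal bone_length.
    """
    dx, dy, dz = (e - p for e, p in zip(estimate, parent))
    dist = math.sqrt(dx * dx + dy * dy + dz * dz)
    if dist == 0:
        raise ValueError("estimate coincides with parent; direction unknown")
    s = bone_length / dist
    return (parent[0] + dx * s, parent[1] + dy * s, parent[2] + dz * s)

# Hip at 1 m height, a sloppy knee estimate on the floor, 0.45 m thigh.
knee = constrain_to_bone((0.0, 1.0, 0.0), (0.0, 0.0, 0.0), 0.45)
```

Chain a few rules like this from the waist down to the feet and even imprecise sensors can produce a skeleton that looks believable, if not exact.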

The Future

Even with the limitations, VR can be a lot of fun. It will take some time to get to a level of realism that fools the brain, but it still offers the ability to surround yourself in a location and experience it at full-scale, something impossible on a flat screen or in photographs. The rate of progress is exciting, and many complaints are already resolved, just not affordably yet. In another few years I think we’ll have a very different experience. I’m pretty excited about what’s in store for virtual reality. How about you? Sound off in the comments!

Further Reading

- Manus, company working on full-body tracking

- ControllerPose: Research on controller-mounted cameras

- CNN: VR leg tracking research

- CNN: No legs in VR

- Using electromagnetic sensors for pose tracking/estimation