With so much focus on capturing environments, it seems important to cover the various methods and their pros and cons. Today we will answer the question, “What is LiDAR?”

Definition: LiDAR is an acronym for Light Detection and Ranging, sometimes expanded as Laser Imaging, Detection, and Ranging. The technique is also commonly called 3D scanning or laser scanning.

When capturing a real-world scene, there are multiple ways to do it, depending on your intended use. You can always just take a picture, of course, but that's a very limited view. A second photo taken a few inches to the side gives you a stereoscopic 3D image. Nice, but still pretty limited. Next, you can use a special wide-angle lens to take a 180° photo, or combine several photos into a 360° photo.

What if you want to let someone walk around what you've captured? Using photogrammetry, you can take hundreds of photos from every angle, then use a computer to reconstruct them into a 3D model of the scene. This is very powerful, but it's not the most precise method of capture.

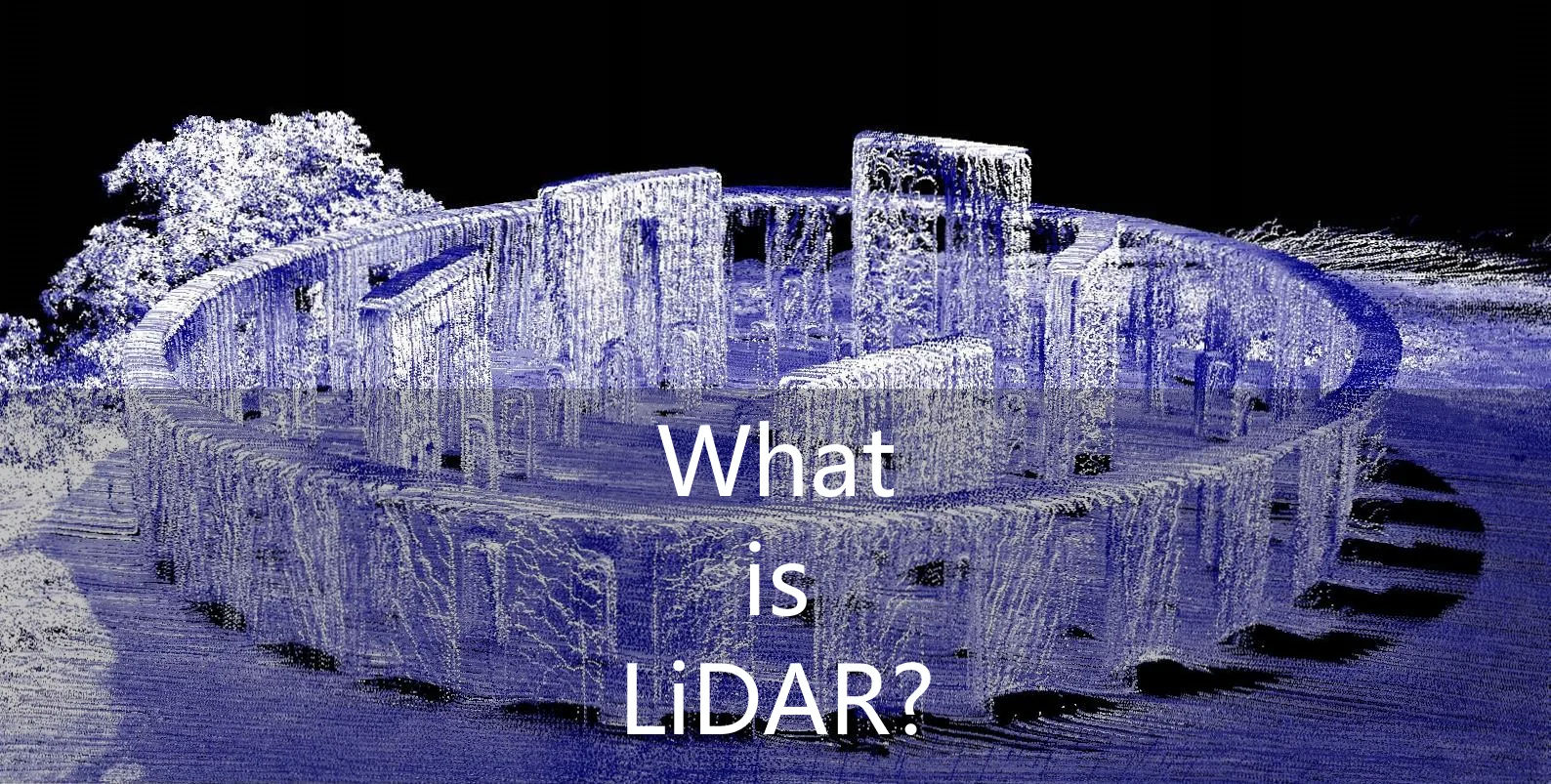

Point Clouds

Instead of optically capturing the scene with a camera, you can record a point cloud using LiDAR. At its core, LiDAR is a very simple technique: the sensor fires a low-power laser pulse and measures how long it takes to bounce back. Because light travels at a known speed, that round-trip time translates directly into distance (the speed of light multiplied by the time, divided by two, since the pulse travels out and back). Combined with the direction the laser was pointing, each measurement gives you the location of one point relative to the sensor. By sweeping the beam around horizontally and vertically (usually with rotating mirrors), the scanner can measure almost the entire sphere around it. If you look at these raw points, they don't form a solid surface, hence the term point cloud.
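To make the time-to-distance idea concrete, here is a minimal Python sketch. It assumes an idealized sensor that reports the round-trip time of each pulse plus the beam's azimuth and elevation angles; the function names and the angle convention are hypothetical, not any particular scanner's API.

```python
import math

# Speed of light in a vacuum, metres per second (exact by definition).
SPEED_OF_LIGHT = 299_792_458.0


def tof_to_range(round_trip_seconds: float) -> float:
    """Convert a round-trip pulse time into a one-way distance in metres.

    The pulse travels to the surface and back, so the distance is half of
    (speed of light * elapsed time).
    """
    return SPEED_OF_LIGHT * round_trip_seconds / 2.0


def to_cartesian(range_m: float, azimuth_deg: float, elevation_deg: float):
    """Turn one measurement (range plus beam direction) into an (x, y, z) point
    relative to the sensor.

    Assumed convention: azimuth is measured in the horizontal plane from the
    x axis, elevation is measured upward from the horizontal plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the ground plane
    return (
        horizontal * math.cos(az),
        horizontal * math.sin(az),
        range_m * math.sin(el),
    )


def scan(measurements):
    """Build a point cloud from (round_trip_seconds, azimuth_deg, elevation_deg) tuples."""
    return [to_cartesian(tof_to_range(t), az, el) for t, az, el in measurements]


# A pulse that returns after about 66.7 nanoseconds hit something roughly 10 m away.
print(round(tof_to_range(66.7e-9), 2))  # prints 10.0
```

Real scanners add calibration, mirror geometry, and atmospheric corrections on top of this, but the core arithmetic is the same: one timing measurement plus one direction yields one point.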

Since each of these points can be measured with millimeter-level precision, LiDAR is much more useful than photogrammetry for world heritage preservation and scientific study. You can measure the exact distance between points and the true elevation, and even record cracks and holes, something photogrammetry can struggle with. LiDAR is also used in autonomous cars, where accurately measuring the distance from the car to surrounding objects and people is critical.
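Once every point has real-world coordinates, measurements like the ones above become simple geometry. The sketch below assumes a hypothetical scan already expressed in metres, with z as elevation above a common reference; the two sample points are made up for illustration.

```python
import math

# Two hypothetical points picked from a scan, in metres (x, y, z).
# Assumption: z is elevation above a shared reference level.
point_a = (12.004, 3.218, 101.352)
point_b = (12.311, 3.240, 101.349)


def distance(p, q):
    """Straight-line (Euclidean) distance between two 3D points."""
    return math.dist(p, q)


def elevation_difference(p, q):
    """Height of q relative to p, assuming z is the vertical axis."""
    return q[2] - p[2]


print(f"distance: {distance(point_a, point_b) * 1000:.1f} mm")
print(f"elevation change: {elevation_difference(point_a, point_b) * 1000:.1f} mm")
```

Because the coordinates come from measured distances rather than being inferred from images, these numbers are only as uncertain as the scanner itself.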

Point clouds can also be captured using volumetric cameras, which combine a depth camera with an optical (color) camera. Unlike a LiDAR scanner sweeping its surroundings, a depth camera simply records the distance to each point in its field of view from a single point of view. It's not as accurate or detailed, but it has its place too.
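A depth camera produces an image where each pixel stores a distance instead of a color. A common way to turn that into points is the pinhole camera model, sketched below. The intrinsic values (FX, FY, CX, CY) are hypothetical, and the code assumes the depth is measured along the camera's optical axis and that the color image is already aligned pixel-for-pixel with the depth image.

```python
# Hypothetical pinhole intrinsics for a 640x480 depth camera, in pixels.
FX, FY = 525.0, 525.0  # focal lengths
CX, CY = 319.5, 239.5  # principal point (optical centre of the image)


def depth_pixel_to_point(u, v, depth_m):
    """Back-project pixel (u, v) with depth along the optical axis into
    camera-space (x, y, z) coordinates, in metres."""
    x = (u - CX) * depth_m / FX
    y = (v - CY) * depth_m / FY
    return (x, y, depth_m)


def depth_image_to_cloud(depth_rows, color_rows=None):
    """Convert a depth image (depth_rows[v][u], metres) into a list of
    (point, color) pairs. color_rows, if given, is assumed to be aligned
    with the depth image; otherwise color is None."""
    cloud = []
    for v, row in enumerate(depth_rows):
        for u, d in enumerate(row):
            if d <= 0:
                continue  # 0 (or negative) means the sensor got no reading here
            color = color_rows[v][u] if color_rows is not None else None
            cloud.append((depth_pixel_to_point(u, v, d), color))
    return cloud
```

Note that every point is relative to one fixed camera position, which is why a single depth frame only covers what that camera can see.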

Using the Data

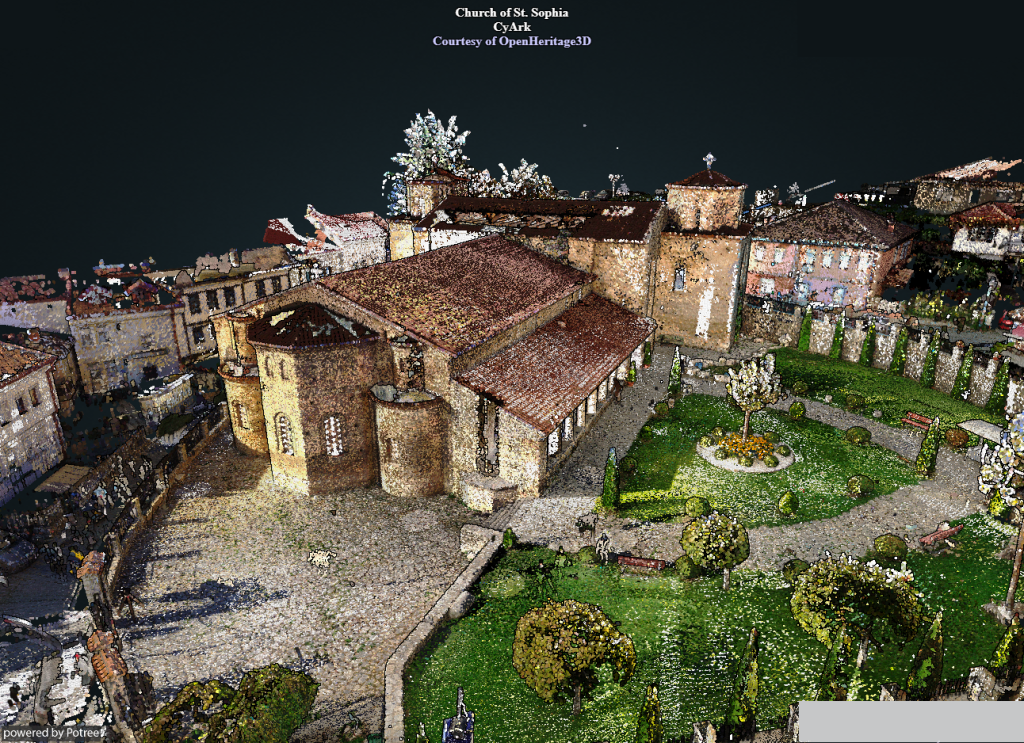

The raw data from LiDAR isn't as pretty to look at as photogrammetric data, but computer algorithms can infer surfaces that connect the points and can even combine the points with color data captured at the same time. It's also important to capture data from several viewpoints, both to handle occlusion (objects blocking the laser's view of whatever is behind them) and to align the separate scans with each other using the points they share. Drones or aircraft can help capture enough angles to avoid missing anything. In the end, the result can be almost as visually detailed as photogrammetry while being highly accurate for other uses.
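Combining scans from several viewpoints means expressing every scan in one shared coordinate frame. The sketch below assumes the pose of a second scanner relative to the first (a rotation about the vertical axis plus a translation) is already known; in practice that pose is usually estimated from overlapping points or survey targets, for example with an algorithm such as iterative closest point (ICP), which is not shown here. All names and values are hypothetical.

```python
import math


def rotate_about_z(point, yaw_deg):
    """Rotate a point counter-clockwise about the vertical (z) axis."""
    x, y, z = point
    a = math.radians(yaw_deg)
    c, s = math.cos(a), math.sin(a)
    return (c * x - s * y, s * x + c * y, z)


def to_frame_a(point, yaw_deg, offset):
    """Map a point from scanner B's frame into scanner A's frame:
    rotate by B's heading, then shift by B's position in A's frame."""
    x, y, z = rotate_about_z(point, yaw_deg)
    return (x + offset[0], y + offset[1], z + offset[2])


def merge_scans(scan_a, scan_b, yaw_deg, offset):
    """Return one combined cloud in scanner A's coordinate frame."""
    return list(scan_a) + [to_frame_a(p, yaw_deg, offset) for p in scan_b]


# Hypothetical setup: scanner B stands 4 m along A's x axis, turned 180° to face A.
# Both scanners see the same post, 3 m from A and 1 m from B.
scan_a = [(3.0, 0.0, 1.2)]
scan_b = [(1.0, 0.0, 1.2)]
merged = merge_scans(scan_a, scan_b, yaw_deg=180.0, offset=(4.0, 0.0, 0.0))
```

After the transform, both observations of the post land on (almost exactly) the same coordinates, which is the property registration algorithms look for when they line up overlapping scans.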

LiDAR in Virtual Reality

Many VR experiences combine photogrammetry with point clouds from LiDAR for the highest quality. Even so, the 3D models generated from all of this data still need to be tweaked by hand for the best results. Perhaps in a few years this step will no longer be necessary. Until then, capturing locations requires money for the right equipment, time, and the skills to put it all together. Despite all of that, there are plenty of excellent apps like Blueplanet, BRINK Traveler, and OtherSight that let you explore amazing scenes. I'm excited to see where things go in the next few years!